# Images

This chapter examines the Image class and the java.awt.image package. Together, they provide support for imaging (the display and manipulation of graphical images). An image is simply a rectangular graphical object. Images are a key component of web design. In fact, the inclusion of the `<img>` tag in the Mosaic browser at NCSA (National Center for Supercomputing Applications) was a catalyst that helped the Web begin to grow explosively in 1993. This tag was used to include an image inline with the flow of hypertext. Java expands upon this basic concept, allowing images to be managed under program control. Because of its importance, Java provides extensive support for imaging.

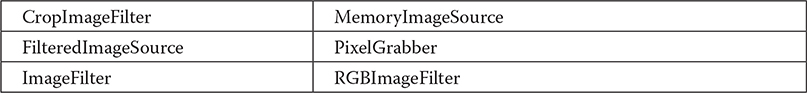

Images are supported by the Image class, which is part of the java.awt package. Images are manipulated using the classes found in the java.awt.image package. There are a large number of imaging classes and interfaces defined by java.awt.image, and it is not possible to examine them all. Instead, we will focus on those that form the foundation of imaging. The java.awt.image classes discussed in this chapter are CropImageFilter, FilteredImageSource, ImageFilter, MemoryImageSource, PixelGrabber, and RGBImageFilter.

The interfaces that we will use are ImageConsumer and ImageProducer.

# File Formats

Originally, web images could only be in GIF format. The GIF image format was created by CompuServe in 1987 to make it possible for images to be viewed while online, so it was well suited to the Internet. GIF images can have only up to 256 colors each. This limitation caused the major browser vendors to add support for JPEG images in 1995. The JPEG format was created by a group of photographic experts to store full-color-spectrum, continuous-tone images. These images, when properly created, can be of much higher fidelity as well as more highly compressed than a GIF encoding of the same source image. Another file format is PNG. It, too, is an alternative to GIF. In almost all cases, you will never care or notice which format is being used in your programs. The Java image classes abstract the differences behind a clean interface.

# Image Fundamentals: Creating, Loading, and Displaying

There are three common operations that occur when you work with images: creating an image, loading an image, and displaying an image. In Java, the Image class is used to refer to images in memory and to images that must be loaded from external sources. Thus, Java provides ways for you to create a new image object and ways to load one. It also provides a means by which an image can be displayed. Let’s look at each.

# Creating an Image Object

You might expect that you create a memory image using something like the following:

Image test = new Image(200, 100); // Error -- won’t work

Not so. Because images must eventually be painted on a window to be seen, the Image class doesn’t have enough information about its environment to create the proper data format for the screen. Therefore, the Component class in java.awt has a factory method called createImage( ) that is used to create Image objects. (Remember that all of the AWT components are subclasses of Component, so all support this method.)

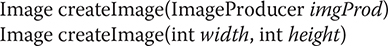

The createImage( ) method has the following two forms:

Image createImage(ImageProducer imgProd)

Image createImage(int width, int height)

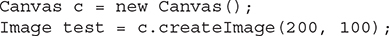

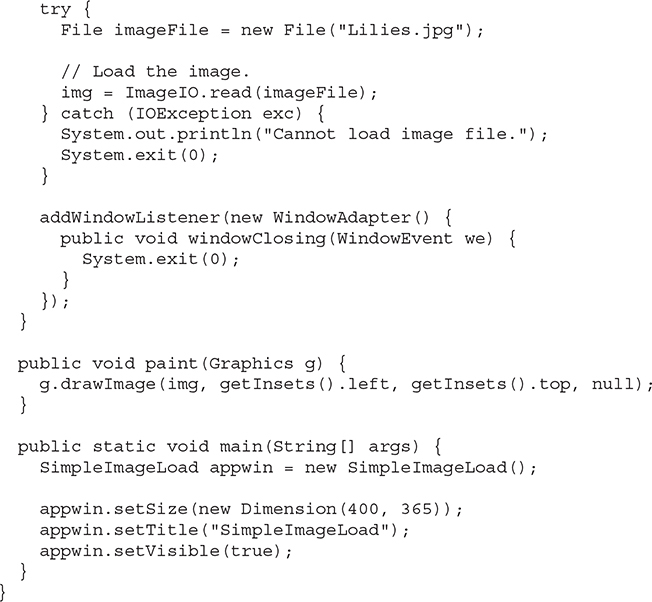

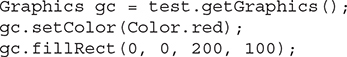

The first form returns an image produced by imgProd, which is an object of a class that implements the ImageProducer interface. (We will look at image producers later.) The second form returns a blank (that is, empty) image that has the specified width and height. Here is an example:

This creates an instance of Canvas and then calls the createImage( ) method to actually make an Image object. At this point, the image is blank. Later, you will see how to write data to it.
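The fragment described above can be sketched as follows. One caveat: according to the Component documentation, createImage(int, int) returns null while the component is not displayable (or when running headless), so a Canvas that has not yet been added to a visible window yields no image. The class name BlankImageSketch and the BufferedImage fallback are assumptions added for illustration, not part of the original example.

```java
import java.awt.Canvas;
import java.awt.Image;
import java.awt.image.BufferedImage;

// Hypothetical helper class; the name is not from the chapter.
class BlankImageSketch {
    static Image blank(int width, int height) {
        Canvas c = new Canvas();
        // Factory method inherited from Component; null until c is displayable.
        Image test = c.createImage(width, height);
        if (test == null) {
            // Assumed fallback so the sketch still produces a blank image.
            test = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        }
        return test;
    }
}
```

In a real program, the call would normally be made after the component has been added to a visible frame, at which point the fallback is not needed.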

# Loading an Image

Another way to obtain an image is to load one, either from a file on the local file system or from a URL. Here, we will use the local file system. The easiest way to load an image is to use one of the static methods defined by the ImageIO class. ImageIO provides extensive support for reading and writing images. It is packaged in javax.imageio, and beginning with JDK 9, javax.imageio is part of the java.desktop module. The method that loads an image is called read( ). The form we will use is shown here:

static BufferedImage read(File imageFile) throws IOException

Here, imageFile specifies the file that contains the image. The method returns a reference to the image in the form of a BufferedImage, which is a subclass of Image that includes a buffer. It returns null if no registered image reader can decode the file, so check the result before using it.
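As a minimal sketch of read( ), the following helper loads a file and converts a null result into an exception. To keep the example self-contained, it first writes a small PNG to a temporary file with ImageIO.write( ). The class and method names (ImageLoadSketch, load, roundTrip) are hypothetical.

```java
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

// Hypothetical helper class; the names are illustrative only.
class ImageLoadSketch {
    // Loads an image, treating a null result (no suitable reader) as an error.
    static BufferedImage load(File imageFile) throws IOException {
        BufferedImage img = ImageIO.read(imageFile);
        if (img == null) {
            throw new IOException("Not a readable image: " + imageFile);
        }
        return img;
    }

    // Writes a blank PNG to a temporary file and reads it back with load().
    static BufferedImage roundTrip(int width, int height) {
        try {
            File tmp = File.createTempFile("sketch", ".png");
            try {
                BufferedImage blank =
                    new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
                ImageIO.write(blank, "png", tmp);
                return load(tmp);
            } finally {
                tmp.delete();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```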

# Displaying an Image

Once you have an image, you can display it by using drawImage( ), which is a member of the Graphics class. It has several forms. The one we will be using is shown here:

boolean drawImage(Image imgObj, int left, int top, ImageObserver imgOb)

This displays the image passed in imgObj with its upper-left corner at the coordinates specified by left and top. imgOb is a reference to an object that implements the ImageObserver interface. This interface is implemented by all AWT (and Swing) components. An image observer is an object that can monitor an image while it loads. When no image observer is needed, imgOb can be null.
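To illustrate how left and top position the image, here is a small sketch that draws one in-memory image onto another. It uses the Graphics context of a BufferedImage as the drawing target instead of a window, which is an assumption made so the example runs without a display. The class name DrawImageSketch and the sizes are illustrative.

```java
import java.awt.Color;
import java.awt.Graphics;
import java.awt.image.BufferedImage;

// Hypothetical demonstration class.
class DrawImageSketch {
    // Draws a 10x10 white image onto a 40x40 black target at (left, top).
    static BufferedImage place(int left, int top) {
        BufferedImage imgObj = new BufferedImage(10, 10, BufferedImage.TYPE_INT_RGB);
        Graphics sg = imgObj.getGraphics();
        sg.setColor(Color.WHITE);
        sg.fillRect(0, 0, 10, 10);
        sg.dispose();

        // A new TYPE_INT_RGB image starts out black.
        BufferedImage target = new BufferedImage(40, 40, BufferedImage.TYPE_INT_RGB);
        Graphics g = target.getGraphics();
        // The source is already fully in memory, so no observer is needed.
        g.drawImage(imgObj, left, top, null);
        g.dispose();
        return target;
    }
}
```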

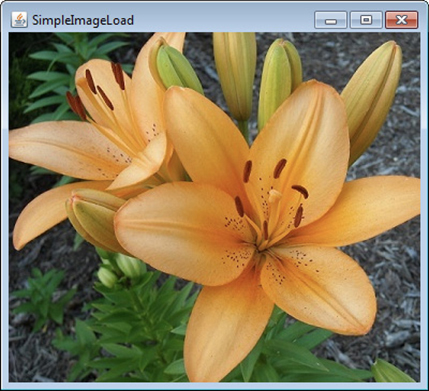

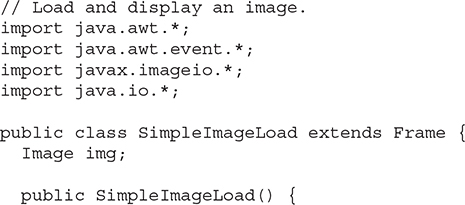

Using read( ) and drawImage( ), it is actually quite easy to load and display an image. Here is a program that loads and displays a single image. The file Lilies.jpg is loaded, but you can substitute any image you like (just make sure it is available in the same directory as the program). Sample output is shown in Figure 28-1.

Figure 28-1 Sample output from SimpleImageLoad

# Double Buffering

Not only are images useful for storing pictures, as we’ve just shown, but you can also use them as offscreen drawing surfaces. This allows you to render any image, including text and graphics, to an offscreen buffer that you can display at a later time. The advantage to doing this is that the image is seen only when it is complete. Drawing a complicated image could take several milliseconds or more, which can be seen by the user as flashing or flickering. This flashing is distracting and causes the user to perceive your rendering as slower than it actually is. Use of an offscreen image to reduce flicker is called double buffering, because the screen is considered a buffer for pixels, and the offscreen image is the second buffer, where you can prepare pixels for display.

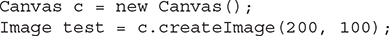

Earlier in this chapter, you saw how to create a blank Image object. Now you will see how to draw on that image rather than the screen. As you recall from earlier chapters, you need a Graphics object in order to use any of Java’s rendering methods. Conveniently, the Graphics object that you can use to draw on an Image is available via the getGraphics( ) method. Here is a code fragment that creates a new image, obtains its graphics context, and fills the entire image with red pixels:
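A fragment along the lines just described might look like the following sketch. It creates the image as a BufferedImage rather than through Component.createImage( ), an assumption made so that the code works without a displayable component. The class name RedFillSketch is hypothetical.

```java
import java.awt.Color;
import java.awt.Graphics;
import java.awt.image.BufferedImage;

// Hypothetical demonstration class.
class RedFillSketch {
    static BufferedImage redImage(int width, int height) {
        BufferedImage test = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        Graphics gc = test.getGraphics();   // graphics context for the offscreen image
        gc.setColor(Color.red);
        gc.fillRect(0, 0, width, height);   // fill the entire image with red pixels
        gc.dispose();                       // release the context when finished
        return test;
    }
}
```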

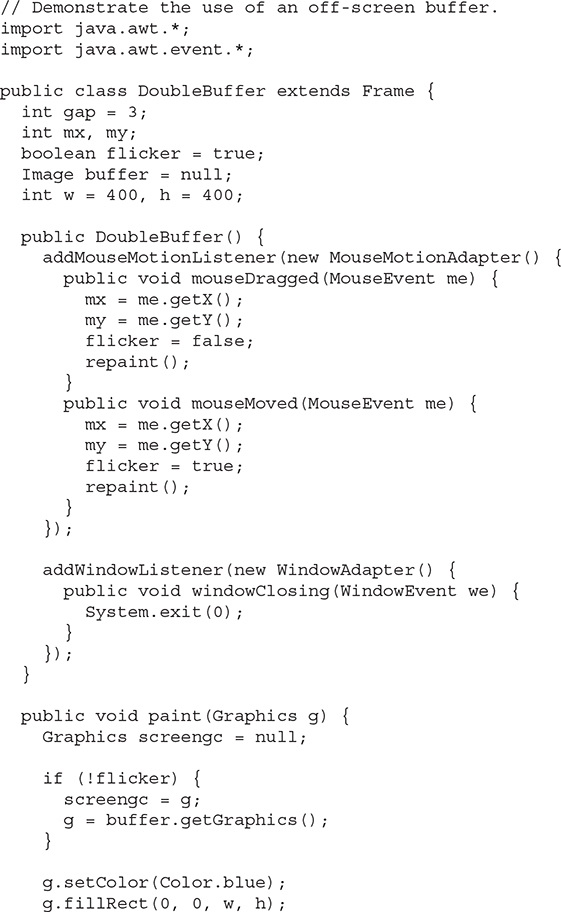

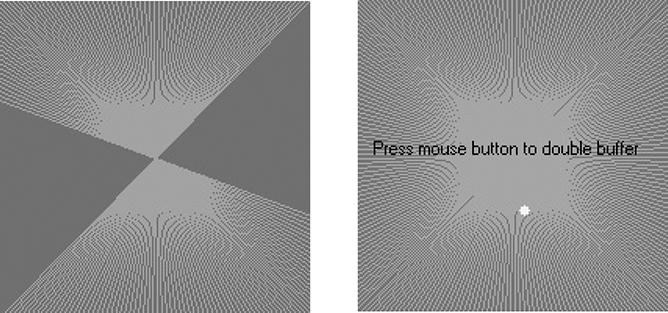

Once you have constructed and filled an offscreen image, it will still not be visible. To actually display the image, call drawImage( ). Here is an example that draws a time-consuming image to demonstrate the difference that double buffering can make in perceived drawing time:

This simple program has a complicated paint( ) method. It fills the background with blue and then draws a red moiré pattern on top of that. It paints some black text on top of that and then paints a yellow circle centered at the coordinates mx, my. The mouseMoved( ) and mouseDragged( ) methods are overridden to track the mouse position. These methods are identical, except for the setting of the flicker Boolean variable. mouseMoved( ) sets flicker to true, and mouseDragged( ) sets it to false. This has the effect of calling repaint( ) with flicker set to true when the mouse is moved (but no button is pressed) and set to false when the mouse is dragged with any button pressed.

When paint( ) gets called with flicker set to true, we see each drawing operation as it is executed on the screen. In the case where a mouse button is pressed and paint( ) is called with flicker set to false, we see quite a different picture. The paint( ) method swaps the Graphics reference g with the graphics context that refers to the offscreen canvas, buffer, which we created in main( ). Then all of the drawing operations are invisible. At the end of paint( ), we simply call drawImage( ) to show the results of these drawing methods all at once.
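The swap of graphics contexts described above can be sketched roughly as follows. This is a simplified stand-in, not the DoubleBuffer program itself: the moiré pattern and text are omitted, and the method signature (which passes in the buffer, size, and mouse position explicitly) is an assumption made to keep the example self-contained.

```java
import java.awt.Color;
import java.awt.Graphics;
import java.awt.Image;

// Hypothetical, simplified version of the paint logic.
class DoubleBufferSketch {
    static void paint(Graphics g, Image buffer, boolean flicker,
                      int w, int h, int mx, int my) {
        Graphics screengc = null;
        if (!flicker) {
            screengc = g;              // remember the visible context
            g = buffer.getGraphics();  // redirect all drawing offscreen
        }

        g.setColor(Color.blue);        // background
        g.fillRect(0, 0, w, h);
        g.setColor(Color.yellow);      // circle centered at (mx, my)
        g.fillOval(mx - 10, my - 10, 20, 20);

        if (!flicker) {
            g.dispose();
            // Show the finished frame in a single operation.
            screengc.drawImage(buffer, 0, 0, null);
        }
    }
}
```

With flicker set to true, each drawing call goes straight to the visible context. With it set to false, the viewer sees only the final drawImage( ) call.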

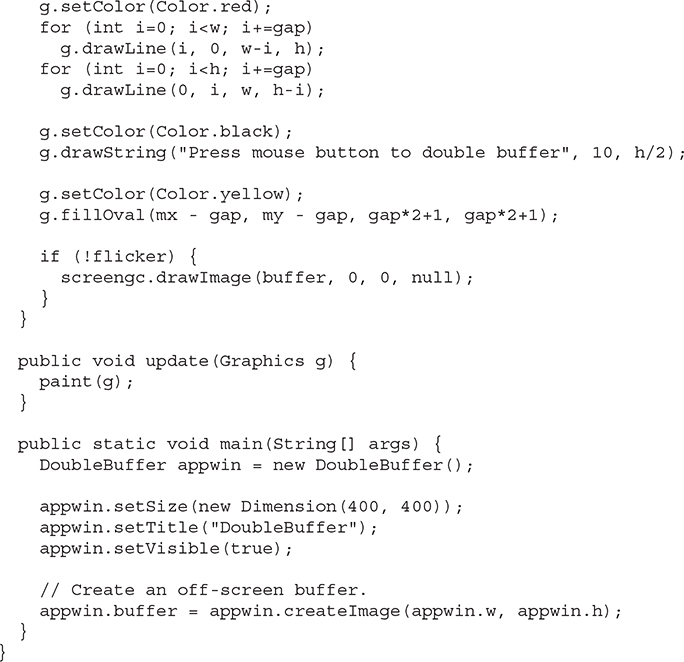

Sample output is shown in Figure 28-2. The left snapshot is what the screen looks like with the mouse button not pressed. As you can see, the image was in the middle of repainting when this snapshot was taken. The right snapshot shows how, when a mouse button is pressed, the image is always complete and clean due to double buffering.

Figure 28-2 Output from DoubleBuffer without (left) and with (right) double buffering

# ImageProducer

ImageProducer is an interface for objects that want to produce data for images. An object that implements the ImageProducer interface will supply integer or byte arrays that represent image data and produce Image objects. As you saw earlier, one form of the createImage( ) method takes an ImageProducer object as its argument. There are two image producers contained in java.awt.image: MemoryImageSource and FilteredImageSource. Here, we will examine MemoryImageSource and create a new Image object from generated data.

# MemoryImageSource

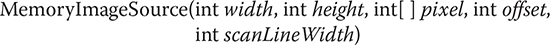

MemoryImageSource is a class that creates a new Image from an array of data. It defines several constructors. Here is the one we will be using:

MemoryImageSource(int width, int height, int pixel[ ], int offset, int scanLineWidth)

The MemoryImageSource object is constructed out of the array of integers specified by pixel, in the default RGB color model to produce data for an Image object. In the default color model, a pixel is an integer with Alpha, Red, Green, and Blue (0xAARRGGBB). The Alpha value represents a degree of transparency for the pixel. Fully transparent is 0, and fully opaque is 255. The width and height of the resulting image are passed in width and height. The starting point in the pixel array to begin reading data is passed in offset. The width of a scan line (which is often the same as the width of the image) is passed in scanLineWidth.

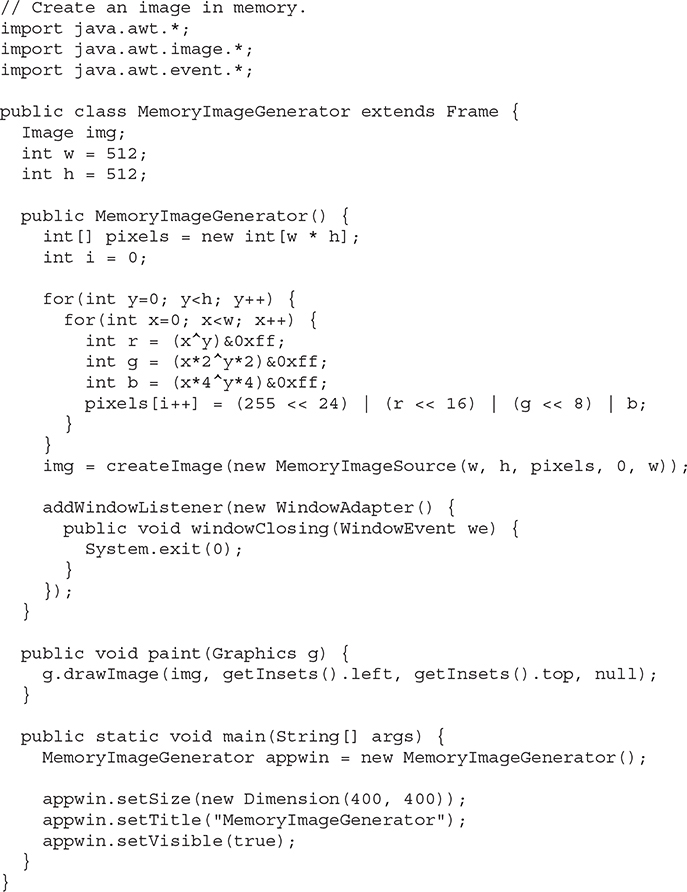

The following short example generates a MemoryImageSource object using a variation on a simple algorithm (a bitwise-exclusive-OR of the x and y address of each pixel) from the book Beyond Photography: The Digital Darkroom by Gerard J. Holzmann (Prentice Hall, 1988).

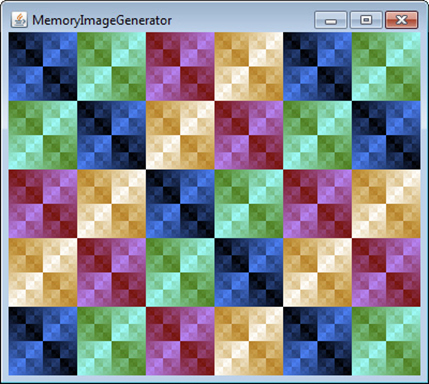

The data for the new MemoryImageSource is created in the constructor. An array of integers is created to hold the pixel values; the data is generated in the nested for loops where the r, g, and b values get shifted into a pixel in the pixels array. Finally, createImage( ) is called with a new instance of a MemoryImageSource created from the raw pixel data as its parameter. Figure 28-3 shows the image.
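The pixel-generation step can be sketched as shown here. The exact multipliers used for the green and blue channels are an assumption (the text says only that the algorithm is a variation on XORing the x and y addresses), and the image is created through Toolkit.createImage( ) rather than a component so that no window is required. The class name XorPatternSketch is hypothetical.

```java
import java.awt.Image;
import java.awt.Toolkit;
import java.awt.image.MemoryImageSource;

// Hypothetical demonstration class.
class XorPatternSketch {
    // Builds the 0xAARRGGBB pixel array for a w-by-h XOR pattern.
    static int[] pixels(int w, int h) {
        int[] pixels = new int[w * h];
        int i = 0;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int r = (x ^ y) & 0xff;
                int g = ((x * 2) ^ (y * 2)) & 0xff;   // assumed variation
                int b = ((x * 4) ^ (y * 4)) & 0xff;   // assumed variation
                // Fully opaque alpha, then red, green, and blue shifted into place.
                pixels[i++] = (255 << 24) | (r << 16) | (g << 8) | b;
            }
        }
        return pixels;
    }

    // Wraps the pixel data in a MemoryImageSource and creates an Image from it.
    static Image image(int w, int h) {
        return Toolkit.getDefaultToolkit()
                      .createImage(new MemoryImageSource(w, h, pixels(w, h), 0, w));
    }
}
```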

Figure 28-3 Sample output from MemoryImageGenerator

# ImageConsumer

ImageConsumer is an interface for objects that want to take pixel data from images and supply it as another kind of data. This, obviously, is the opposite of ImageProducer, described earlier. An object that implements the ImageConsumer interface is going to create int or byte arrays that represent pixels from an Image object. We will examine the PixelGrabber class, which is a simple implementation of the ImageConsumer interface.

# PixelGrabber

The PixelGrabber class is defined within java.awt.image. It is the inverse of the MemoryImageSource class. Rather than constructing an image from an array of pixel values, it takes an existing image and grabs the pixel array from it. To use PixelGrabber, you first create an array of ints big enough to hold the pixel data, and then you create a PixelGrabber instance passing in the rectangle that you want to grab. Finally, you call grabPixels( ) on that instance.

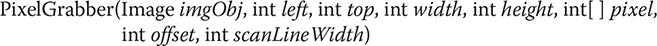

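The PixelGrabber constructor that is used in this chapter is shown here:

PixelGrabber(Image imgObj, int left, int top, int width, int height, int pixel[ ], int offset, int scanLineWidth)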

Here, imgObj is the object whose pixels are being grabbed. The values of left and top specify the upper-left corner of the rectangle, and width and height specify the dimensions of the rectangle from which the pixels will be obtained. The pixels will be stored in pixel beginning at offset. The width of a scan line (which is often the same as the width of the image) is passed in scanLineWidth.

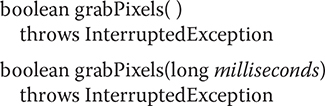

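grabPixels( ) is defined like this:

boolean grabPixels( ) throws InterruptedException

boolean grabPixels(long milliseconds) throws InterruptedException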

Both methods return true if successful and false otherwise. In the second form, milliseconds specifies how long the method will wait for the pixels. Both throw InterruptedException if execution is interrupted by another thread.
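The three steps (allocate the array, construct the PixelGrabber, call grabPixels( )) might be put together as in this sketch. The helper names grab and demo are hypothetical, and the source image is a small BufferedImage so that the example needs no image file.

```java
import java.awt.Image;
import java.awt.image.BufferedImage;
import java.awt.image.PixelGrabber;

// Hypothetical demonstration class.
class PixelGrabSketch {
    // Grabs the width-by-height rectangle at (0, 0) as 0xAARRGGBB values.
    static int[] grab(Image img, int width, int height) {
        int[] pixels = new int[width * height];
        PixelGrabber pg = new PixelGrabber(img, 0, 0, width, height, pixels, 0, width);
        try {
            if (!pg.grabPixels()) {
                throw new IllegalStateException("Pixel grab failed");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while grabbing pixels", e);
        }
        return pixels;
    }

    // Grabs a 3x2 image in which only the pixel at (1, 1) has been set.
    static int[] demo() {
        BufferedImage img = new BufferedImage(3, 2, BufferedImage.TYPE_INT_RGB);
        img.setRGB(1, 1, 0x336699);
        return grab(img, 3, 2);
    }
}
```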

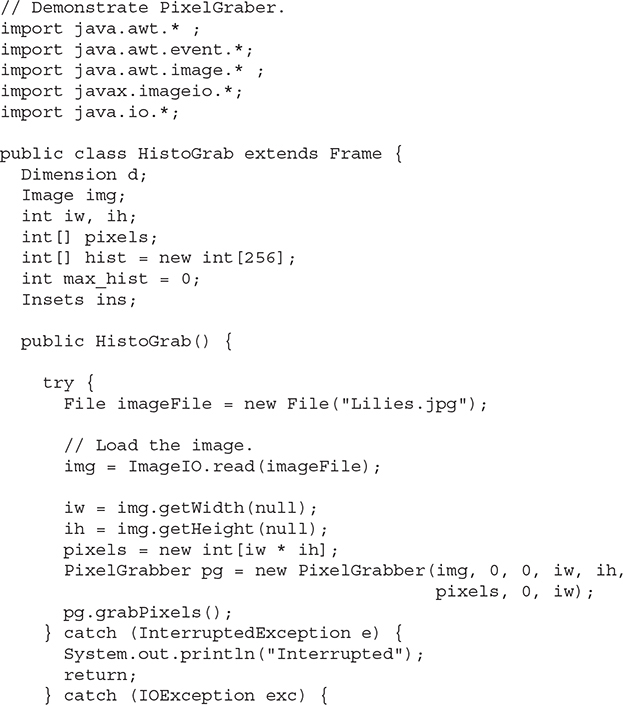

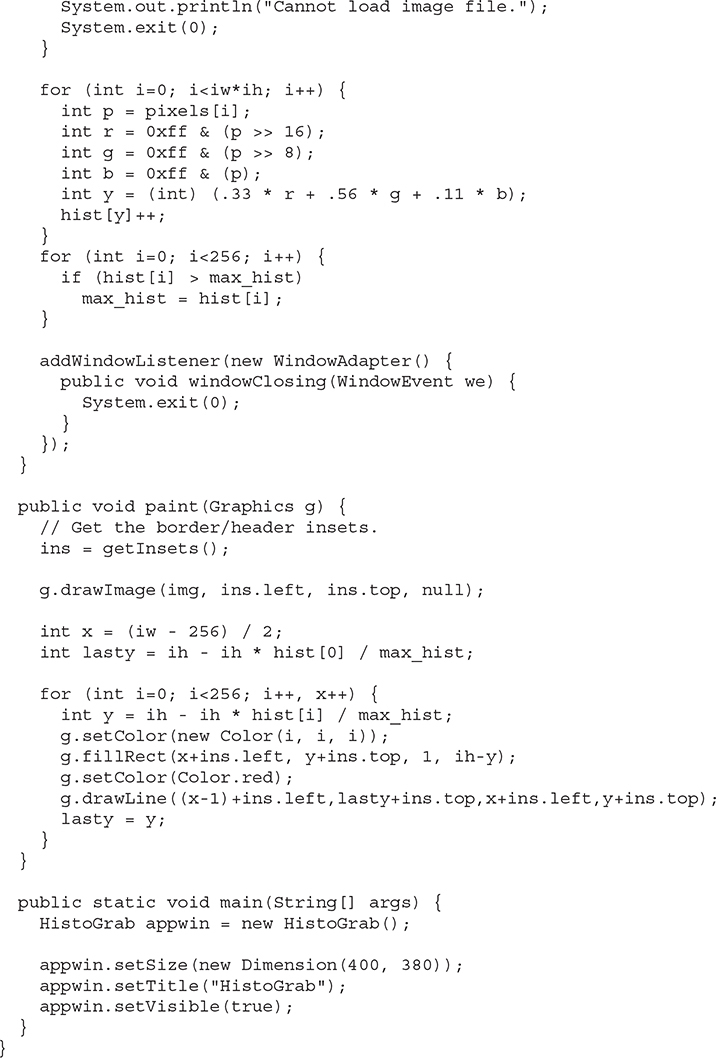

Here is an example that grabs the pixels from an image and then creates a histogram of pixel brightness. The histogram is simply a count of pixels that are a certain brightness for all brightness settings between 0 and 255. After the program paints the image, it draws the histogram over the top.
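The counting step of such a histogram can be sketched as follows. The brightness weights (33 percent red, 56 percent green, 11 percent blue, in integer arithmetic) are an assumption chosen for illustration; the text does not state the formula. The class name is hypothetical.

```java
// Hypothetical demonstration class.
class BrightnessHistogramSketch {
    // Returns a 256-entry array in which entry k counts the pixels of brightness k.
    static int[] histogram(int[] pixels) {
        int[] hist = new int[256];
        for (int p : pixels) {
            int r = (p >> 16) & 0xff;
            int g = (p >> 8) & 0xff;
            int b = p & 0xff;
            // Assumed weighting; the weights sum to 100, so the result stays in 0..255.
            int brightness = (33 * r + 56 * g + 11 * b) / 100;
            hist[brightness]++;
        }
        return hist;
    }
}
```

The pixels array would typically come from a PixelGrabber, as described earlier.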

Figure 28-4 shows a sample image and its histogram.

Figure 28-4 Sample output from HistoGrab

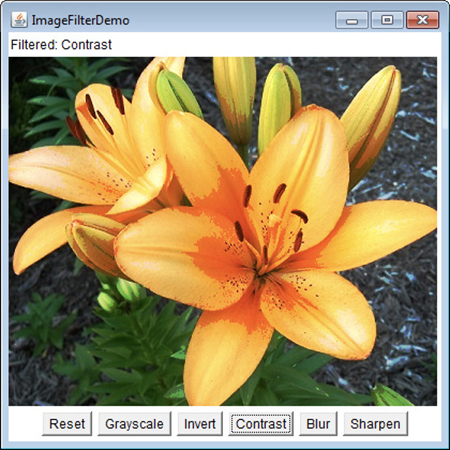

# ImageFilter

Given the ImageProducer and ImageConsumer interface pair—and their concrete classes MemoryImageSource and PixelGrabber—you can create an arbitrary set of translation filters that takes a source of pixels, modifies them, and passes them on to an arbitrary consumer. This mechanism is analogous to the way concrete classes are created from the abstract I/O classes InputStream, OutputStream, Reader, and Writer (described in Chapter 22). This stream model for images is completed by the introduction of the ImageFilter class. Some subclasses of ImageFilter in the java.awt.image package are AreaAveragingScaleFilter, CropImageFilter, ReplicateScaleFilter, and RGBImageFilter. There is also an implementation of ImageProducer called FilteredImageSource, which takes an arbitrary ImageFilter and wraps it around an ImageProducer to filter the pixels it produces. An instance of FilteredImageSource can be used as an ImageProducer in calls to createImage( ), in much the same way that BufferedInputStreams can be used as InputStreams.

In this chapter, we examine two filters: CropImageFilter and RGBImageFilter.

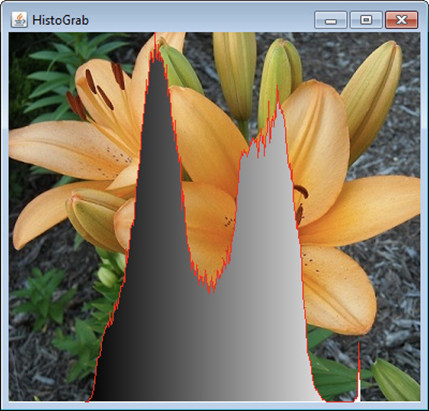

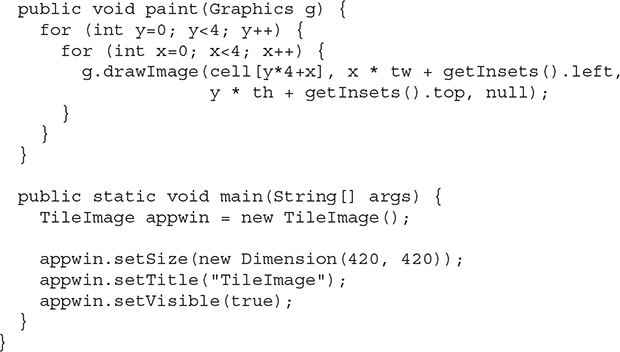

# CropImageFilter

CropImageFilter filters an image source to extract a rectangular region. One situation in which this filter is valuable is where you want to use several small images from a single, larger source image. Loading twenty 2K images takes much longer than loading a single 40K image that has many frames of an animation tiled into it. If every subimage is the same size, then you can easily extract these images by using CropImageFilter to disassemble the block once your program starts. Here is an example that creates 16 images taken from a single image. The tiles are then scrambled by swapping a random pair from the 16 images 32 times.
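As a rough sketch of the tiling idea, the following code builds a cropped ImageProducer for one tile and computes a scrambled tile order using 32 random swaps. It is not the TileImage program; the class and method names are hypothetical, and the firstPixelOfTile method exists only as a self-check, using an 8×8 test image whose pixel values encode their own coordinates.

```java
import java.awt.image.BufferedImage;
import java.awt.image.CropImageFilter;
import java.awt.image.FilteredImageSource;
import java.awt.image.ImageProducer;
import java.awt.image.PixelGrabber;
import java.util.Random;

// Hypothetical demonstration class.
class TileSketch {
    static final int TILES = 4;   // 4 x 4 grid = 16 tiles

    // Producer that yields only tile (tx, ty), where each tile is tw by th pixels.
    static ImageProducer tile(ImageProducer source, int tw, int th, int tx, int ty) {
        return new FilteredImageSource(source,
                                       new CropImageFilter(tw * tx, th * ty, tw, th));
    }

    // Scrambles the tile order by swapping a random pair 32 times.
    static int[] scrambledOrder(long seed) {
        int[] order = new int[TILES * TILES];
        for (int i = 0; i < order.length; i++) order[i] = i;
        Random rnd = new Random(seed);
        for (int i = 0; i < 32; i++) {
            int a = rnd.nextInt(order.length);
            int b = rnd.nextInt(order.length);
            int t = order[a]; order[a] = order[b]; order[b] = t;
        }
        return order;
    }

    // Self-check: returns the RGB bits of the first pixel of tile (tx, ty).
    static int firstPixelOfTile(int tx, int ty) {
        BufferedImage src = new BufferedImage(8, 8, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < 8; y++)
            for (int x = 0; x < 8; x++)
                src.setRGB(x, y, (x << 8) | y);   // encode x in green, y in blue
        int tw = 8 / TILES, th = 8 / TILES;
        int[] out = new int[tw * th];
        PixelGrabber pg = new PixelGrabber(tile(src.getSource(), tw, th, tx, ty),
                                           0, 0, tw, th, out, 0, tw);
        try {
            pg.grabPixels();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return out[0] & 0xFFFFFF;
    }
}
```

In a windowed program, each producer returned by tile( ) would be passed to createImage( ) to obtain a displayable Image.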

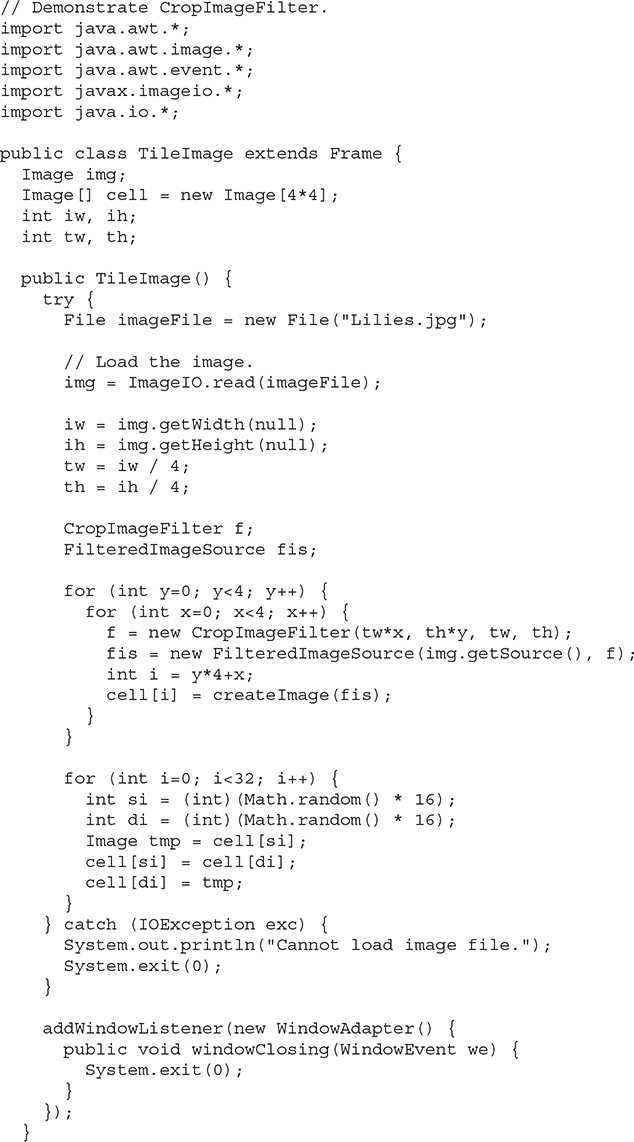

Figure 28-5 shows the flowers image scrambled by TileImage.

Figure 28-5 Sample output from TileImage

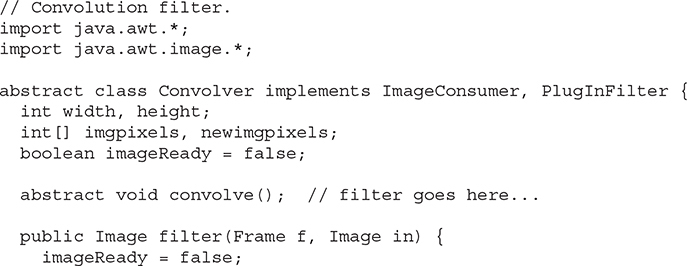

# RGBImageFilter

The RGBImageFilter is used to convert one image to another, pixel by pixel, transforming the colors along the way. This filter could be used to brighten an image, to increase its contrast, or even to convert it to grayscale.

To demonstrate RGBImageFilter, we have developed a somewhat complicated example that employs a dynamic plug-in strategy for image-processing filters. We’ve created an interface for generalized image filtering so that a program can simply load these filters at run time without having to know about all of the ImageFilters in advance. This example consists of the main class called ImageFilterDemo, the interface called PlugInFilter, and a utility class called LoadedImage. Also included are three filters—Grayscale, Invert, and Contrast—that simply manipulate the color space of the source image using RGBImageFilters, and two more classes—Blur and Sharpen—that do more complicated "convolution" filters that change pixel data based on the pixels surrounding each pixel of source data. Blur and Sharpen are subclasses of an abstract helper class called Convolver. Let’s look at each part of our example.

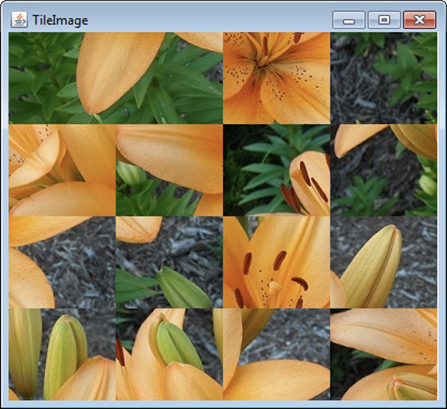

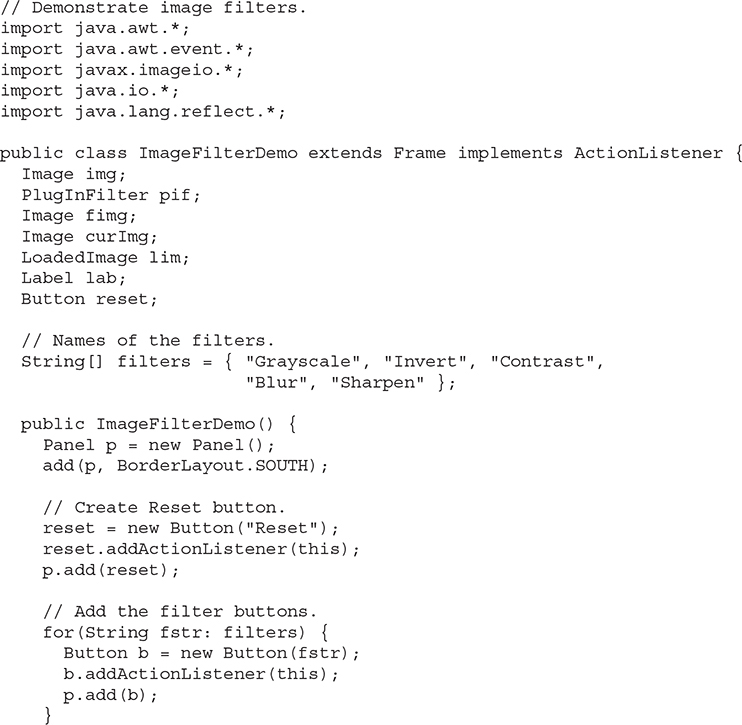

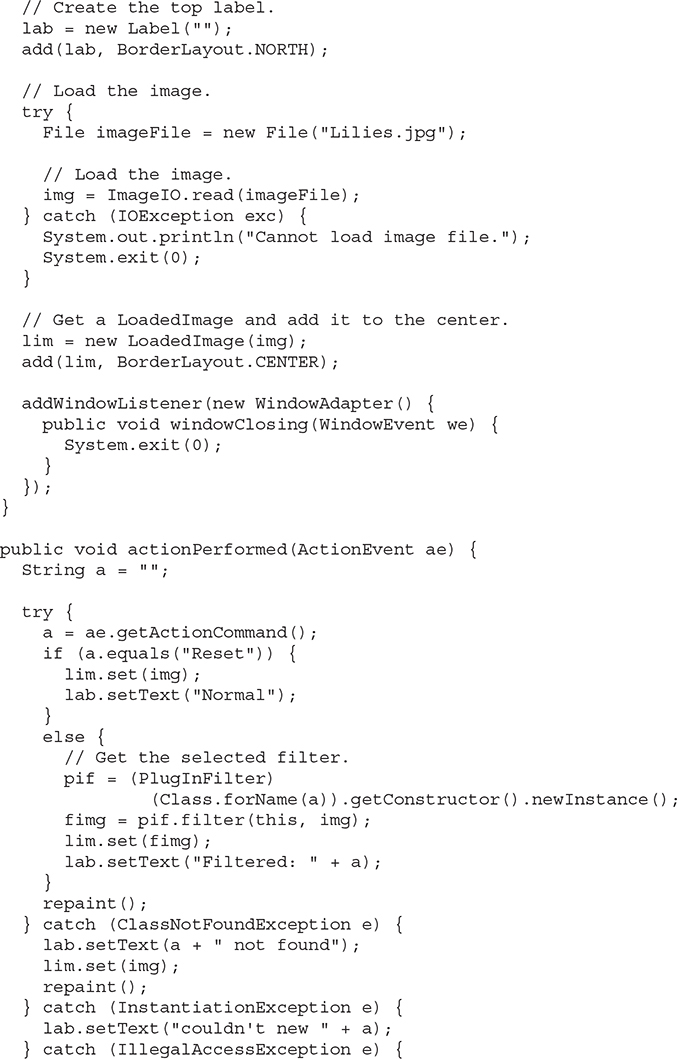

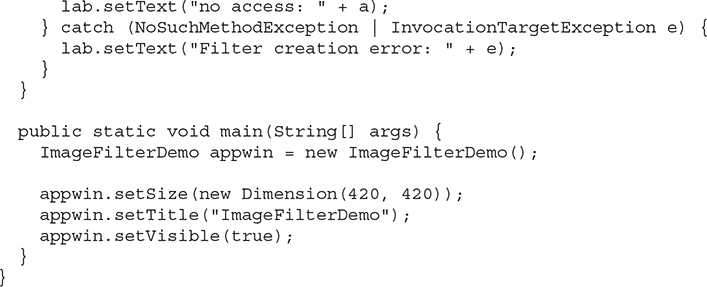

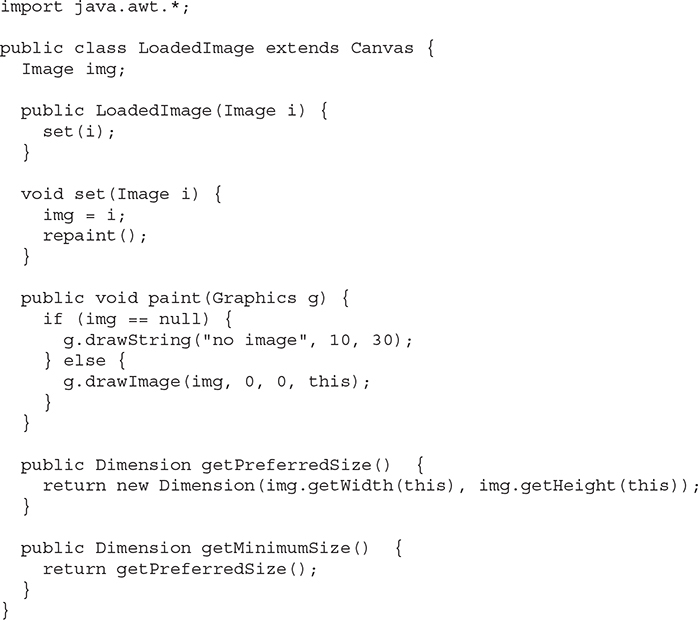

# ImageFilterDemo.java

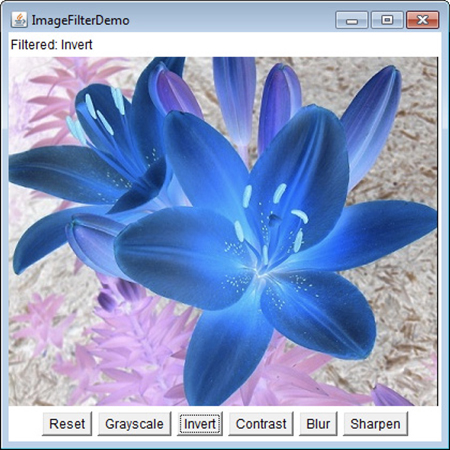

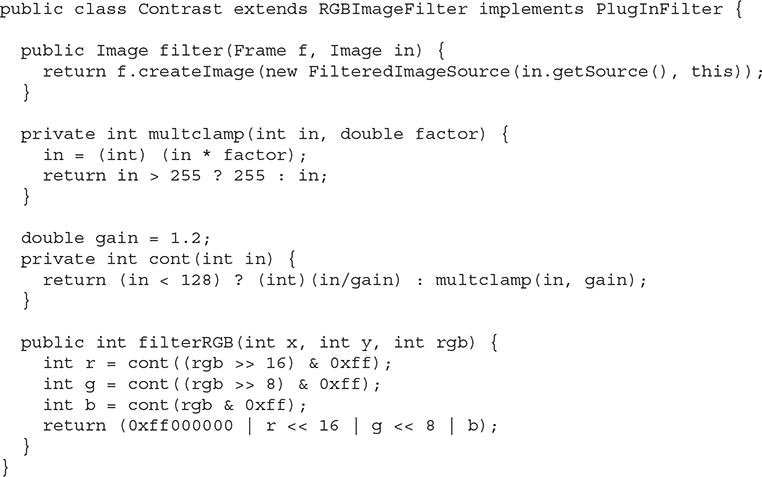

The ImageFilterDemo class is the main class for the sample image filters. It employs the default BorderLayout, with a Panel at the South position to hold the buttons that will represent each filter. A Label object occupies the North slot for informational messages about filter progress. The Center is where the image (which is encapsulated in the LoadedImage Canvas subclass, described later) is put.

The actionPerformed( ) method is interesting because it uses the label from a button as the name of a filter class that it loads. This method is robust and takes appropriate action if the button does not correspond to a proper class that implements PlugInFilter.

Figure 28-6 shows what the program looks like when it is first loaded.

Figure 28-6 Sample normal output from ImageFilterDemo

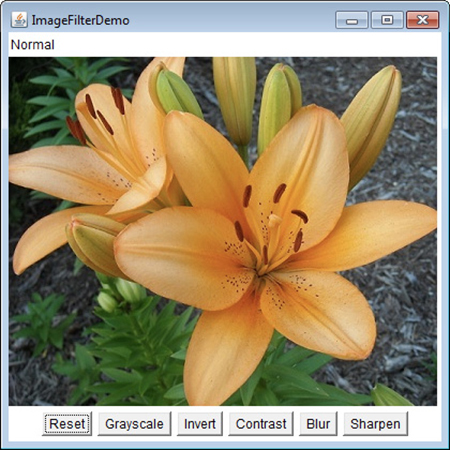

# PlugInFilter.java

PlugInFilter is a simple interface used to abstract image filtering. It has only one method, filter( ), which takes the frame and the source image and returns a new image that has been filtered in some way.
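Based on that description, the interface and the load-by-name technique might look like the following sketch. The parameter names, the Identity plug-in, and the PlugInLoaderSketch helper are assumptions for illustration; in particular, returning null for an unusable class is one possible way to handle a button that does not name a valid filter.

```java
import java.awt.Frame;
import java.awt.Image;

// Interface as described in the text; parameter names are assumed.
interface PlugInFilter {
    Image filter(Frame f, Image in);
}

// Trivial hypothetical plug-in, used only to exercise the loader.
class Identity implements PlugInFilter {
    public Image filter(Frame f, Image in) {
        return in;
    }
}

// Hypothetical helper that loads a filter class by name at run time.
class PlugInLoaderSketch {
    // Returns a new filter instance, or null if the name is not a usable PlugInFilter.
    static PlugInFilter load(String className) {
        try {
            Object o = Class.forName(className).getDeclaredConstructor().newInstance();
            return (o instanceof PlugInFilter) ? (PlugInFilter) o : null;
        } catch (ReflectiveOperationException e) {
            return null;   // class missing, not instantiable, or constructor failed
        }
    }
}
```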

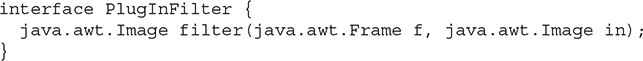

# LoadedImage.java

LoadedImage is a convenient subclass of Canvas. It behaves properly under layout manager control because it overrides the getPreferredSize( ) and getMinimumSize( ) methods. Also, it has a method called set( ) that can be used to set a new Image to be displayed in this Canvas. That is how the filtered image is displayed after the plug-in is finished.

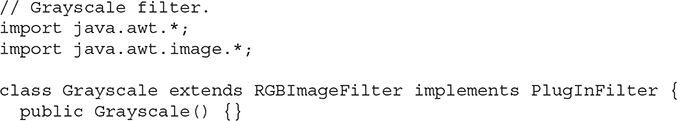

# Grayscale.java

The Grayscale filter is a subclass of RGBImageFilter, which means that Grayscale can use itself as the ImageFilter parameter to FilteredImageSource’s constructor. Then all it needs to do is override filterRGB( ) to change the incoming color values. It takes the red, green, and blue values and computes the brightness of the pixel, using the NTSC (National Television System Committee) color-to-brightness conversion factor. It then simply returns a gray pixel that is the same brightness as the color source.
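A filter of this kind can be sketched as follows. The weights used here are the standard NTSC luma coefficients (0.299, 0.587, 0.114) expressed in integer arithmetic; whether the chapter's Grayscale class uses exactly these values is an assumption. The class name GrayscaleSketch and the apply( ) helper are hypothetical.

```java
import java.awt.Image;
import java.awt.Toolkit;
import java.awt.image.FilteredImageSource;
import java.awt.image.RGBImageFilter;

// Hypothetical demonstration class.
class GrayscaleSketch extends RGBImageFilter {
    GrayscaleSketch() {
        // The result depends only on the color, never on the pixel position.
        canFilterIndexColorModel = true;
    }

    @Override
    public int filterRGB(int x, int y, int rgb) {
        int r = (rgb >> 16) & 0xff;
        int g = (rgb >> 8) & 0xff;
        int b = rgb & 0xff;
        int k = (299 * r + 587 * g + 114 * b) / 1000;   // assumed NTSC weights
        return 0xff000000 | (k << 16) | (k << 8) | k;   // opaque gray pixel
    }

    // Uses this filter as the ImageFilter argument to FilteredImageSource.
    Image apply(Image in) {
        return Toolkit.getDefaultToolkit()
                      .createImage(new FilteredImageSource(in.getSource(), this));
    }
}
```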

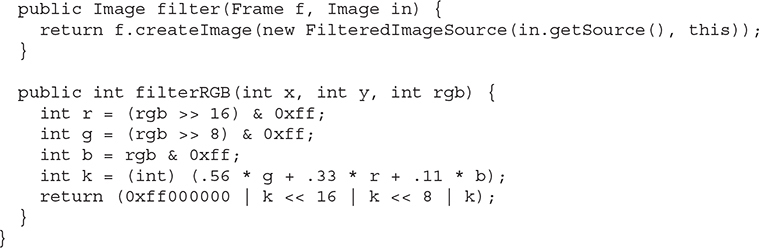

# Invert.java

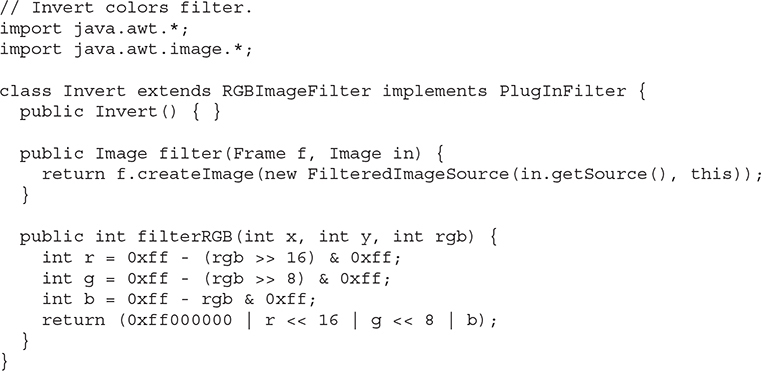

The Invert filter is also quite simple. It takes apart the red, green, and blue values and then inverts them by subtracting them from 255. These inverted values are packed back into a pixel value and returned.
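The per-pixel inversion might be written like this minimal sketch. Forcing the result to be fully opaque is an assumption, and the class name is hypothetical.

```java
import java.awt.image.RGBImageFilter;

// Hypothetical demonstration class.
class InvertSketch extends RGBImageFilter {
    @Override
    public int filterRGB(int x, int y, int rgb) {
        // Take each channel apart and subtract it from 255.
        int r = 0xff - ((rgb >> 16) & 0xff);
        int g = 0xff - ((rgb >> 8) & 0xff);
        int b = 0xff - (rgb & 0xff);
        // Pack the inverted channels back into an opaque pixel.
        return 0xff000000 | (r << 16) | (g << 8) | b;
    }
}
```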

Figure 28-7 shows the image after it has been run through the Invert filter.

Figure 28-7 Using the Invert filter with ImageFilterDemo

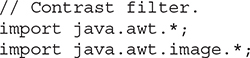

# Contrast.java

The Contrast filter is very similar to Grayscale, except its override of filterRGB( ) is slightly more complicated. The algorithm it uses for contrast enhancement takes the red, green, and blue values separately and boosts them by 1.2 times if they are already brighter than 128. If they are below 128, then they are divided by 1.2. The boosted values are properly clamped at 255 by the multclamp( ) method.
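The algorithm just described can be sketched as follows. The helper name cont( ) and the treatment of a value of exactly 128 (boosted rather than reduced) are assumptions; multclamp( ) follows the name given in the text.

```java
import java.awt.image.RGBImageFilter;

// Hypothetical demonstration class.
class ContrastSketch extends RGBImageFilter {
    static final double GAIN = 1.2;

    // Multiplies a channel value by factor and clamps the result at 255.
    static int multclamp(int in, double factor) {
        int out = (int) (in * factor);
        return Math.min(out, 255);
    }

    // Darkens values below 128 and brightens the rest.
    static int cont(int in) {
        return (in < 128) ? (int) (in / GAIN) : multclamp(in, GAIN);
    }

    @Override
    public int filterRGB(int x, int y, int rgb) {
        int r = cont((rgb >> 16) & 0xff);
        int g = cont((rgb >> 8) & 0xff);
        int b = cont(rgb & 0xff);
        return 0xff000000 | (r << 16) | (g << 8) | b;
    }
}
```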

Figure 28-8 shows the image after Contrast is pressed.

Figure 28-8 Using the Contrast filter with ImageFilterDemo

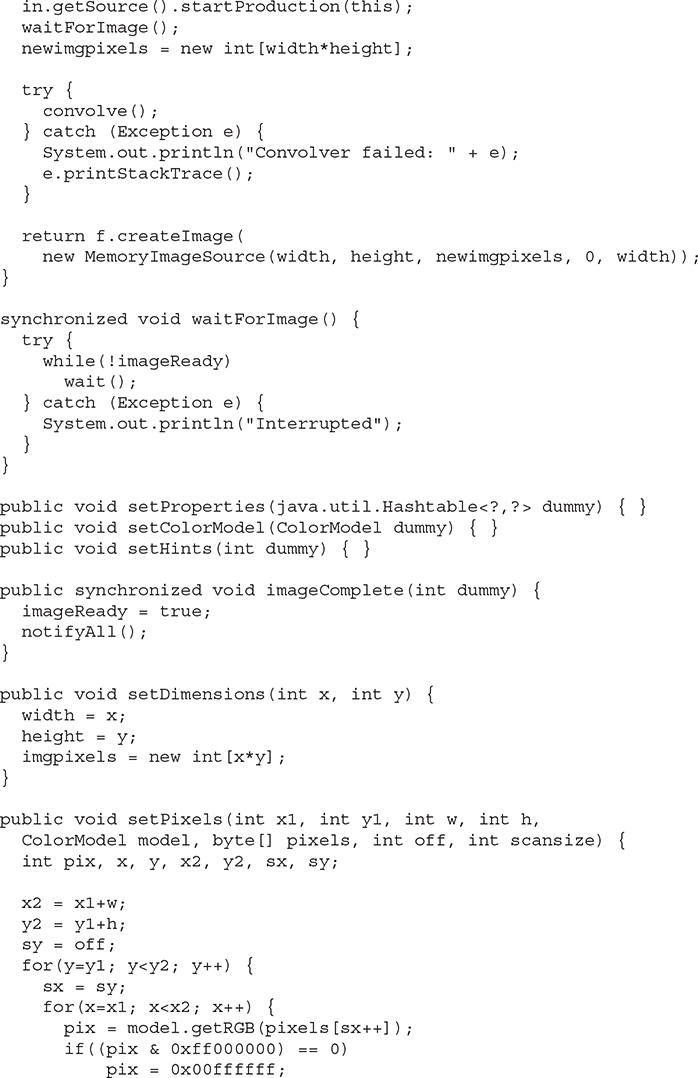

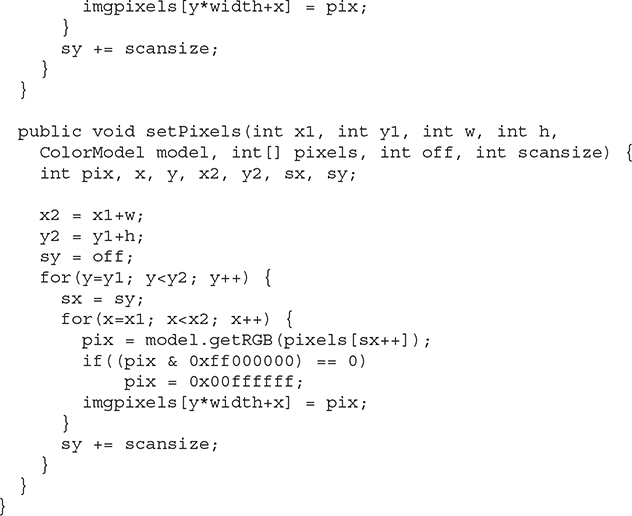

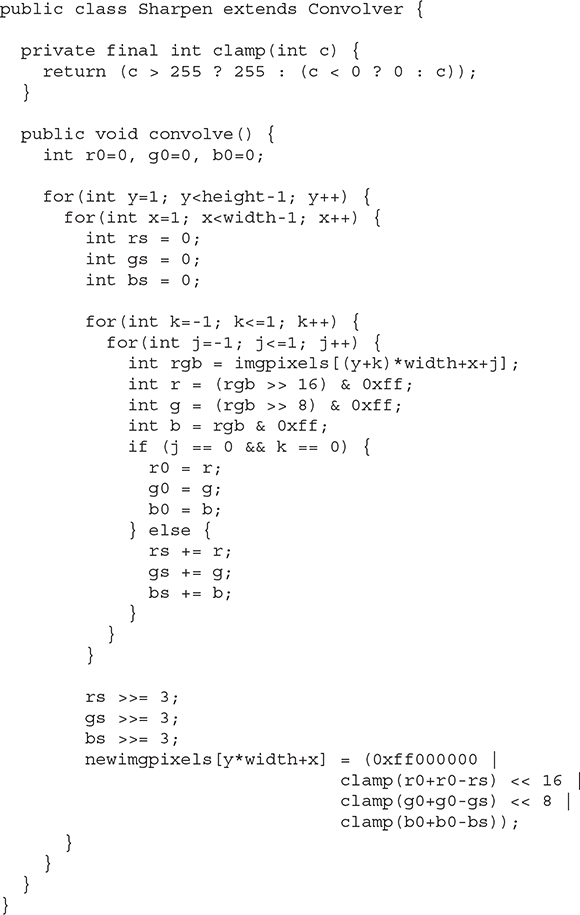

# Convolver.java

The abstract class Convolver handles the basics of a convolution filter by implementing the ImageConsumer interface to move the source pixels into an array called imgpixels. It also creates a second array called newimgpixels for the filtered data. Convolution filters sample a small rectangle of pixels around each pixel in an image, called the convolution kernel. This area, 3×3 pixels in this demo, is used to decide how to change the center pixel in the area.

NOTE The reason that the filter can’t modify the imgpixels array in place is that the next pixel on a scan line would try to use the original value for the previous pixel, which would have just been filtered away.

The two concrete subclasses, shown in the next section, simply implement the convolve( ) method, using imgpixels for source data and newimgpixels to store the result.

NOTE A built-in convolution filter called ConvolveOp is provided by java.awt.image. You may want to explore its capabilities on your own.

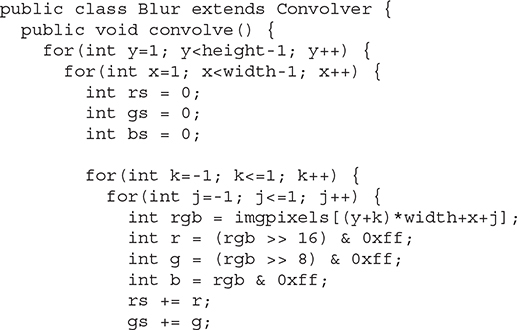

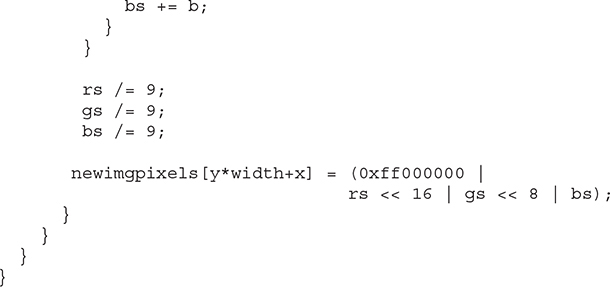

# Blur.java

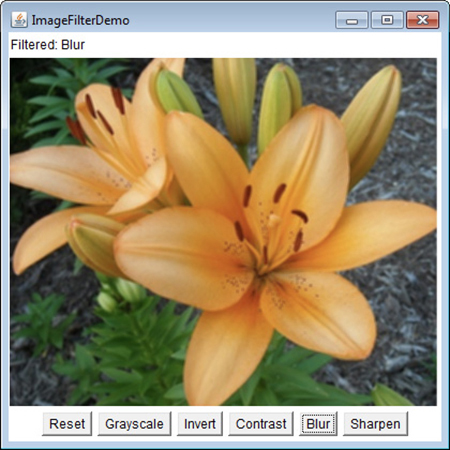

The Blur filter is a subclass of Convolver and simply runs through every pixel in the source image array, imgpixels, and computes the average of the 3×3 box surrounding it. The corresponding output pixel in newimgpixels is that average value.
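The averaging step can be sketched on a plain pixel array, independent of the Convolver plumbing. Leaving the one-pixel border unchanged is an assumption made to keep the loop simple, and the class and method names are hypothetical.

```java
// Hypothetical demonstration class operating on 0xAARRGGBB pixel arrays.
class BlurSketch {
    static int[] blur(int[] imgpixels, int width, int height) {
        // Separate output array; border pixels keep their original values.
        int[] newimgpixels = imgpixels.clone();
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int rs = 0, gs = 0, bs = 0;
                // Sum each channel over the 3x3 box around (x, y).
                for (int k = -1; k <= 1; k++) {
                    for (int j = -1; j <= 1; j++) {
                        int rgb = imgpixels[(y + k) * width + x + j];
                        rs += (rgb >> 16) & 0xff;
                        gs += (rgb >> 8) & 0xff;
                        bs += rgb & 0xff;
                    }
                }
                // Store the average of the nine samples.
                newimgpixels[y * width + x] =
                    0xff000000 | ((rs / 9) << 16) | ((gs / 9) << 8) | (bs / 9);
            }
        }
        return newimgpixels;
    }
}
```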

Figure 28-9 shows the image after Blur.

Figure 28-9 Using the Blur filter with ImageFilterDemo

# Sharpen.java

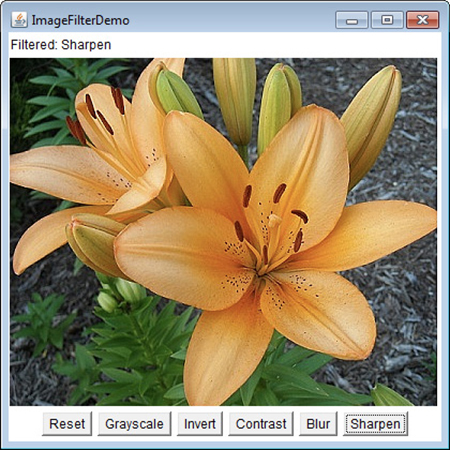

The Sharpen filter is also a subclass of Convolver and is (more or less) the inverse of Blur. It runs through every pixel in the source image array, imgpixels, and computes the average of the 3×3 box surrounding it, not counting the center. The corresponding output pixel in newimgpixels has the difference between the center pixel and the surrounding average added to it. This basically says that if a pixel is 30 brighter than its surroundings, make it another 30 brighter. If, however, it is 10 darker, then make it another 10 darker. This tends to accentuate edges while leaving smooth areas unchanged.
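Here is a comparable sketch of the sharpening step, again on a plain pixel array. Clamping each channel to the range 0 through 255 and leaving the border pixels unchanged are assumptions; the class and method names are hypothetical.

```java
// Hypothetical demonstration class operating on 0xAARRGGBB pixel arrays.
class SharpenSketch {
    static int clamp(int v) {
        return v < 0 ? 0 : (v > 255 ? 255 : v);
    }

    static int[] sharpen(int[] imgpixels, int width, int height) {
        int[] newimgpixels = imgpixels.clone();   // border pixels stay as they are
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int rs = 0, gs = 0, bs = 0;
                // Sum the eight neighbors, skipping the center pixel.
                for (int k = -1; k <= 1; k++) {
                    for (int j = -1; j <= 1; j++) {
                        if (k == 0 && j == 0) continue;
                        int rgb = imgpixels[(y + k) * width + x + j];
                        rs += (rgb >> 16) & 0xff;
                        gs += (rgb >> 8) & 0xff;
                        bs += rgb & 0xff;
                    }
                }
                int c = imgpixels[y * width + x];
                int r = (c >> 16) & 0xff;
                int g = (c >> 8) & 0xff;
                int b = c & 0xff;
                // Add the difference between the center and the neighbor average.
                r = clamp(r + (r - rs / 8));
                g = clamp(g + (g - gs / 8));
                b = clamp(b + (b - bs / 8));
                newimgpixels[y * width + x] = 0xff000000 | (r << 16) | (g << 8) | b;
            }
        }
        return newimgpixels;
    }
}
```

For instance, a center value of 130 surrounded by values of 100 becomes 160, matching the "30 brighter" case described above.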

Figure 28-10 shows the image after Sharpen.

Figure 28-10 Using the Sharpen filter with ImageFilterDemo

# Additional Imaging Classes

In addition to the imaging classes described in this chapter, java.awt.image supplies several others that offer enhanced control over the imaging process and that support advanced imaging techniques. Also available is the imaging package called javax.imageio. It supports reading and writing images, and has plug-ins that handle various image formats. If sophisticated graphical output is of special interest to you, then you will want to explore the additional classes found in java.awt.image and javax.imageio.